
RMSprop: In-depth Explanation

Neha Kumawat

2 years ago

Table of Contents

- Brief idea behind RMSprop

- RMSProp Implementation in Python

- How RMSProp tries to resolve Adagrad’s problem

In my previous article, “Optimizers in Machine Learning and Deep Learning,” I gave a brief introduction to RMSprop optimizers. In this article, I will give an in-depth explanation of the optimizer’s algorithm.

If you haven’t read my previous articles, I recommend first going through the articles on optimizers listed below and then coming back to this one for a better understanding:

- Optimizers in Machine Learning and Deep Learning
- Gradient descent Algorithm: In-Depth explanation
- Adagrad and Adadelta optimizers

Let’s start with a brief idea behind RMSprop.

RMSProp stands for Root Mean Square Propagation. It is an adaptive learning rate optimization algorithm proposed by Geoff Hinton in lecture 6 of the online course “Neural Networks for Machine Learning”.

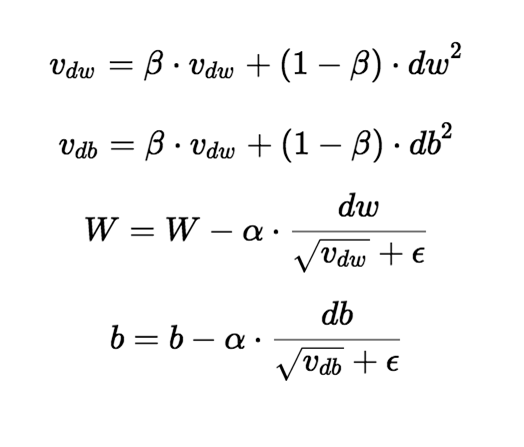

The core idea behind RMSprop is to keep a moving average of the squared gradients for each weight and then divide the gradient by the square root of this mean square. That’s why it’s called RMSprop (root mean square).
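To make the core idea concrete, here is a minimal NumPy sketch of a single RMSProp update for an array of weights. The helper name `rmsprop_step` and its default values are assumptions for illustration; following the implementation later in this article, `eps` is added inside the square root to avoid dividing by zero.

```python
import numpy as np

def rmsprop_step(w, grad, v, lr=0.01, beta=0.9, eps=1e-8):
    """Apply one RMSProp update (hypothetical helper, for illustration).

    w    -- current weights (NumPy array)
    grad -- gradient of the loss with respect to w (same shape)
    v    -- moving average of squared gradients (same shape), zeros at the start
    Returns the updated weights and moving average.
    """
    # Keep a moving average of the squared gradient, separately for each weight
    v = beta * v + (1 - beta) * grad**2
    # Divide the gradient by the root of that mean square
    w = w - lr * grad / np.sqrt(v + eps)
    return w, v

w = np.array([1.0, -2.0])
v = np.zeros_like(w)
grad = np.array([4.0, 0.5])
w, v = rmsprop_step(w, grad, v, lr=0.1)
print(w, v)
```

On this first step both weights move by the same amount (about 0.316) even though their gradients differ by a factor of 8: dividing by the root mean square cancels out the scale of each gradient.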

RMSProp tries to resolve Adagrad’s radically diminishing learning rates by using a moving average of the squared gradient. It uses the magnitude of the recent gradients to normalize the gradient.

Adagrad accumulates the squares of all previous gradients, whereas RMSprop keeps only an exponentially decaying average of them, so old gradients are gradually forgotten. This alleviates the problem of the Adagrad algorithm’s learning rate dropping too quickly.
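The difference between accumulating and averaging shows up in a few lines of Python. This sketch feeds the same hypothetical constant gradient to both rules (the function name `squared_gradient_state` and the numbers are illustrative assumptions) and prints the quantity each optimizer divides the learning rate by, before the square root.

```python
def squared_gradient_state(grads, beta=0.9):
    """Return AdaGrad's running sum and RMSProp's decaying average of g**2."""
    ada_sum, rms_avg = 0.0, 0.0
    ada_hist, rms_hist = [], []
    for g in grads:
        ada_sum += g**2                                # AdaGrad: keeps every past term
        rms_avg = beta * rms_avg + (1 - beta) * g**2   # RMSProp: old terms fade out
        ada_hist.append(ada_sum)
        rms_hist.append(rms_avg)
    return ada_hist, rms_hist

ada, rms = squared_gradient_state([2.0] * 100)   # constant gradient of 2
for t in (1, 10, 100):
    print(f"step {t:3d}: AdaGrad sum = {ada[t-1]:7.2f}, RMSProp average = {rms[t-1]:5.2f}")
```

AdaGrad’s sum grows linearly with the number of steps, so its effective learning rate keeps shrinking, while RMSProp’s average levels off at g² = 4.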

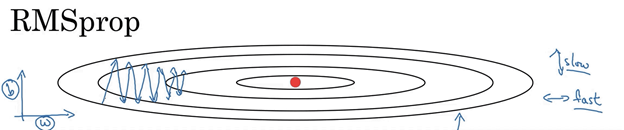

The main difference is that RMSProp calculates an exponentially weighted average of the squared gradients. This damps the updates along directions where the gradient swings with a large amplitude, so that the oscillation in each dimension is smaller. It also tends to make the network converge faster.

In RMSProp, the learning rate is adjusted automatically, and a different effective learning rate is chosen for each parameter.

RMSProp divides the learning rate by the square root of an exponentially decaying average of squared gradients.
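The per-parameter learning rate can be made explicit. The sketch below uses a hypothetical helper, `effective_learning_rates`, with made-up gradients: one steep parameter whose gradients are 50 and one shallow parameter whose gradients are 1. It prints the effective learning rate `lr / sqrt(v + eps)` each parameter ends up with.

```python
import numpy as np

def effective_learning_rates(grad_history, lr=0.01, beta=0.9, eps=1e-8):
    """Per-parameter effective learning rate lr / sqrt(v + eps) after a gradient sequence."""
    v = np.zeros_like(np.asarray(grad_history[0], dtype=float))
    for g in grad_history:
        # Decaying average of squared gradients, one entry per parameter
        v = beta * v + (1 - beta) * np.asarray(g, dtype=float) ** 2
    return lr / np.sqrt(v + eps)

# Parameter 0: steep direction with large gradients; parameter 1: shallow direction.
history = [np.array([50.0, 1.0])] * 50
rates = effective_learning_rates(history)
print(rates)
```

The steep parameter gets a learning rate about 50 times smaller than the shallow one, so the actual steps (learning rate times gradient) come out at a similar size in both directions: large swings along the steep direction are damped while the shallow direction still makes progress.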

Now that you have a brief idea of the RMSprop optimizer, let me explain the intuition behind it.

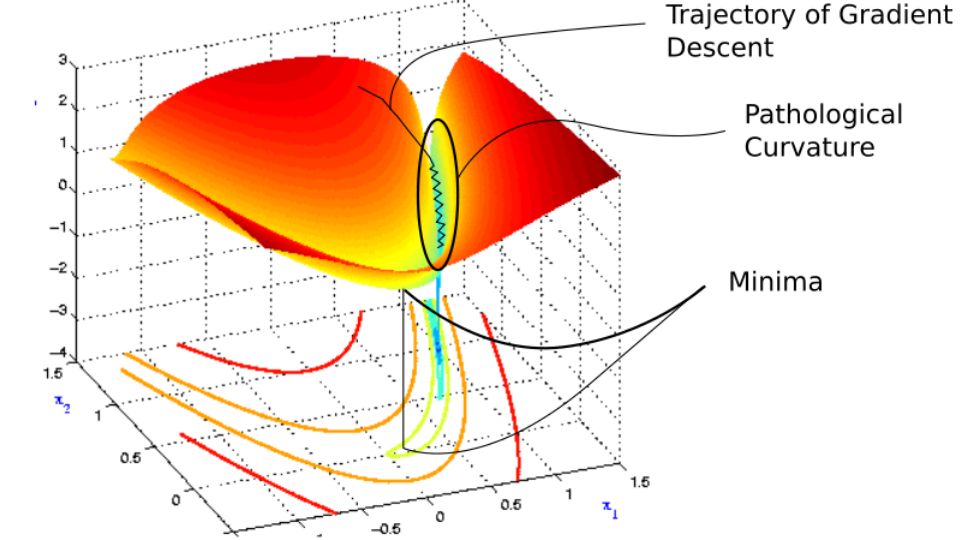

Let’s try to understand it in a simple way. We can say that the RMSprop optimizer is similar to the gradient descent algorithm with momentum.

In the RMSprop optimizer, it tries to

restrict the oscillations in the vertical direction, which in turn helps us to

increase our learning rate and so that our algorithm could take larger steps in

the horizontal direction and converge fast. The main difference between RMSprop

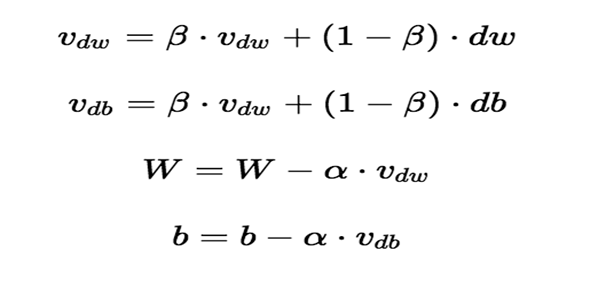

and gradient descent is how we calculate the gradients for them. From the

below-mentioned equations we can see how the gradients can be calculated for

the RMSprop and gradient descent with momentum. Here, the value of momentum is

denoted by beta and which is usually set to 0.9 most of the time.

So, from the above equations, we can see that the two sets of equations are very similar. The only differences are what gets averaged (the gradient for momentum, the squared gradient for RMSprop) and how that average is used to update the weights and bias.
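For reference, one common way to write the two update rules for a weight $w$ with gradient $dw$ is sketched below (the bias $b$ is updated the same way with $db$). The notation is an assumption chosen to match the Python implementation in this article ($\eta$ is the learning rate, $\beta$ the decay rate, $\epsilon$ a small constant) and may differ from the figure; some formulations of momentum also omit the $(1-\beta)$ factor.

$$
\begin{aligned}
&\text{Momentum:} && v_w \leftarrow \beta\, v_w + (1-\beta)\, dw, && w \leftarrow w - \eta\, v_w \\
&\text{RMSProp:} && v_w \leftarrow \beta\, v_w + (1-\beta)\, (dw)^2, && w \leftarrow w - \frac{\eta}{\sqrt{v_w + \epsilon}}\, dw
\end{aligned}
$$

In both rules $v_w$ is an exponentially weighted average: momentum steps along the averaged gradient itself, while RMSProp uses the average of squared gradients only to rescale the current gradient.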

RMSProp Implementation in Python

We can simply define a function for RMSProp as shown below:

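```python
import numpy as np

def rmsprop(X, Y, grad_w, grad_b, init_w, init_b, max_epochs,
            eta=0.1, beta=0.9, eps=1e-9):
    # grad_w(w, b, x, y) and grad_b(w, b, x, y) return the loss gradient
    # for a single example; X and Y hold the training data.
    w, b = init_w, init_b
    vw, vb = 0.0, 0.0  # running averages of the squared gradients
    for _ in range(max_epochs):
        # Accumulate the gradients over the whole dataset (full batch)
        dw, db = 0.0, 0.0
        for x, y in zip(X, Y):
            dw += grad_w(w, b, x, y)
            db += grad_b(w, b, x, y)
        # Exponentially decaying average of the squared gradients
        vw = beta * vw + (1 - beta) * dw**2
        vb = beta * vb + (1 - beta) * db**2
        # Divide each step by the root mean square (eps avoids division by zero)
        w = w - (eta / np.sqrt(vw + eps)) * dw
        b = b - (eta / np.sqrt(vb + eps)) * db
    return w, b
```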
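As a usage sketch, the same update can be written with NumPy’s vectorized operations for a hypothetical toy problem: fitting a line y = w*x + b to noise-free data generated from y = 2x + 1 by minimizing the mean squared error. The data, the function name `fit_line_rmsprop`, and the hyperparameter values are assumptions for illustration. The gradients here are averaged over the batch instead of summed; RMSProp’s division by the root mean square largely cancels such a constant scale factor.

```python
import numpy as np

def fit_line_rmsprop(X, Y, eta=0.01, beta=0.9, eps=1e-8, max_epochs=2000):
    """Fit y ~ w*x + b by full-batch RMSProp on the mean squared error."""
    w, b = 0.0, 0.0
    vw, vb = 0.0, 0.0
    for _ in range(max_epochs):
        err = (w * X + b) - Y
        dw = 2 * np.mean(err * X)   # d(MSE)/dw
        db = 2 * np.mean(err)       # d(MSE)/db
        # Decaying averages of the squared gradients
        vw = beta * vw + (1 - beta) * dw**2
        vb = beta * vb + (1 - beta) * db**2
        # Per-parameter steps scaled by the root mean square
        w -= eta / np.sqrt(vw + eps) * dw
        b -= eta / np.sqrt(vb + eps) * db
    return w, b

rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=100)
Y = 2 * X + 1                       # noise-free toy target
w, b = fit_line_rmsprop(X, Y)
print(round(w, 2), round(b, 2))
```

The printed values should be close to 2 and 1.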

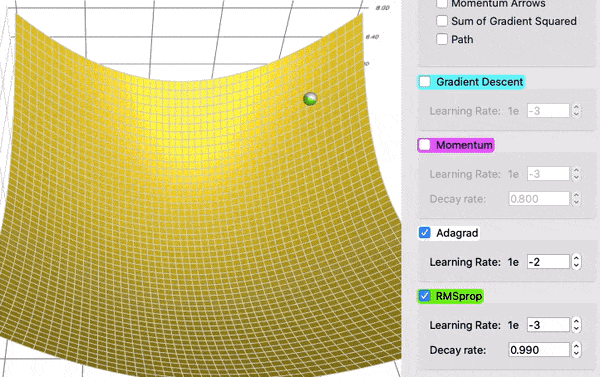

To see the effect of the decay, let’s compare the two. AdaGrad (white) keeps up with RMSProp (green) initially, as expected with a tuned learning rate and decay rate. But we can see from the above animation that AdaGrad’s sums of squared gradients accumulate so fast that they soon become huge (shown by the sizes of the squares in the animation). Dividing by these ever-growing sums shrinks AdaGrad’s steps until it practically stops moving.

RMSProp, on the other hand, keeps the squares at a manageable size the whole time thanks to the decay rate. This is why RMSProp moves faster than AdaGrad here.
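The “AdaGrad practically stops moving” effect can also be reproduced without the animation. This sketch uses a hypothetical setup, the tilted line f(w) = 3w whose gradient never shrinks, and a helper named `descend`; it runs both update rules with the same learning rate and prints where each one has reached.

```python
import numpy as np

def descend(rule, steps=1000, lr=0.1, beta=0.9, eps=1e-8, slope=3.0):
    """Minimize f(w) = slope * w with AdaGrad or RMSProp; return the path of w."""
    w, acc = 0.0, 0.0
    path = []
    for _ in range(steps):
        g = slope                                    # the gradient never shrinks
        if rule == "adagrad":
            acc += g**2                              # sum of all squared gradients
        else:  # "rmsprop"
            acc = beta * acc + (1 - beta) * g**2     # decaying average
        w -= lr * g / np.sqrt(acc + eps)
        path.append(w)
    return path

ada = descend("adagrad")
rms = descend("rmsprop")
for t in (10, 100, 1000):
    print(f"step {t:4d}: AdaGrad w = {ada[t-1]:8.2f}, RMSProp w = {rms[t-1]:8.2f}")
```

AdaGrad’s step at step t is lr / sqrt(t), so it covers only about 6 units in 1,000 steps, while RMSProp keeps taking steps of about lr = 0.1 and travels more than ten times as far.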

I hope that after reading this article you now know what RMSprop is, how it works, how it differs from the Adagrad optimizer, and why it matters. In the next articles, I will give a detailed explanation of some other types of optimizers. For more blogs and courses on data science, machine learning, artificial intelligence, and new technologies, visit us at InsideAIML.

Thanks for reading…