Natural Language Processing using NLTK package

Neha Kumawat

2 years ago

Table of Contents

- Text Analytics

- What is Natural Language Processing (NLP)?

- What is NLTK?

- Tokenization

1. Sentence Tokenize

2. Word Tokenize

3. How to implement it in Python

- POS Tags and Chunking

1. Parts of Speech Tagging (POS tags)

2. Chunking

- Stop Words

- Stemming and Lemmatization

1. Stemming

2. Lemmatization

- Named Entity Recognition (NER)

- Graph

- Parsing

Text Analytics

As we know, there are many types of data and many ways of processing them to extract useful information. In this section we will talk about one of the most sought-after kinds of data today: text.

Customer information is the backbone of many of the best-known companies of the 21st century. Take Google as an example. How do you suppose Google provides so many services for free? What profit does it make from them? Much of it comes from advertising: by analyzing your searches, Google learns what you are interested in and lets companies show you targeted ads. The moment you search for a product on Google, you start seeing recommendations for that product on many of the websites you visit. This is the most basic use of text analytics: recommending products to customers by analyzing their searches.

Similarly, many companies analyze the positive or negative reviews given by customers and try to predict customer behavior. There is a wealth of such unstructured data, such as emails, Google searches, online surveys, tweets, and online reviews, that can be processed using text analysis. A great deal of key information about people and customers can be derived by processing and analyzing this unstructured text.

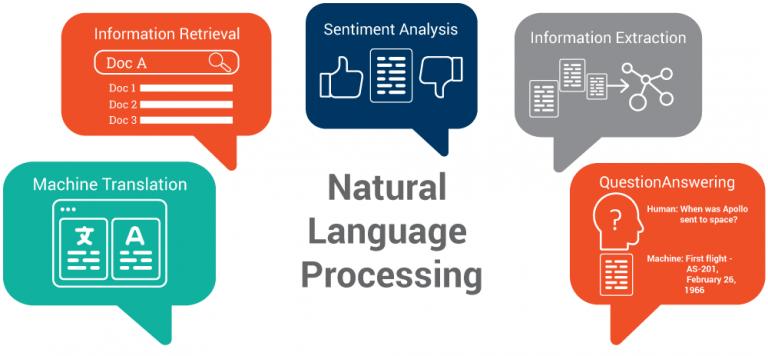

What is Natural Language Processing (NLP)?

NLP is a branch of Artificial Intelligence concerned with enabling machines to understand human language. The ultimate goal of NLP is to read, understand, and draw useful conclusions from human language. This is a hard problem, because human language varies widely in vocabulary, grammar, pronunciation, and so on. In recent years, however, there have been major breakthroughs in the field.

Siri and Alexa are two examples of NLP in use.

We will use NLP for text analytics.

There are many libraries available for NLP in Python, such as:

- Natural Language Toolkit (NLTK)

- spaCy

- Gensim

- Polyglot

- TextBlob

- CoreNLP

- Pattern

- Vocabulary

- PyNLPl

- Quepy

NLP is a vast topic, so it is best learned step by step. In this article, I will give you a brief introduction to the NLTK package.

In later articles I will take you through some of the other widely used NLP libraries, such as spaCy and Gensim. So, let's start.

What is NLTK?

NLTK stands for Natural Language Toolkit, a leading platform for building Python programs that work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, etc. The library also serves as a practical introduction to programming for language processing. NLTK has been called “a wonderful tool for teaching and working in computational linguistics using Python,” and “an amazing library to play with natural language.”

Before we dive into text analytics using NLTK, let's go over some important terms used in natural language processing (NLP):

Tokenization

Tokenization is the process of breaking a given piece of text into a list of sentences or words. When a paragraph is broken down into a list of sentences, it is called sentence tokenization. Similarly, when the sentences are further broken down into a list of words, it is known as word tokenization.

Let's understand this with an example. Below is a sample paragraph; let's see how tokenization works on it:

"Data science is an inter-disciplinary field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from many structural and unstructured data. Data science is related to data mining, machine learning and big data. Data science is a concept to unify statistics, data analysis, machine learning, domain knowledge and their related methods in order to understand and analyze actual phenomena with data. It uses techniques and theories drawn from many fields within the context of mathematics, statistics, computer science, domain knowledge and information science. Turing award winner Jim Gray imagined data science as a fourth paradigm of science (empirical, theoretical, computational and now data-driven) and asserted that everything about science is changing because of the impact of information technology and the data deluge"

Sentence Tokenize

Below is an example of sentence tokenization. Each element of the list is one sentence of the paragraph:

['Data science is an inter-disciplinary field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from many structural and unstructured data.',
'Data science is related to data mining, machine learning and big data.',
'Data science is a concept to unify statistics, data analysis, machine learning, domain knowledge and their related methods in order to understand and analyze actual phenomena with data.',
'It uses techniques and theories drawn from many fields within the context of mathematics, statistics, computer science, domain knowledge and information science.',
'Turing award winner Jim Gray imagined data science as a fourth paradigm of science (empirical, theoretical, computational and now data-driven) and asserted that everything about science is changing because of the impact of information technology and the data deluge']

Word Tokenize

Below is an example of word tokenization:

['Data', 'science', 'is', 'an', 'inter-disciplinary', 'field', 'that', 'uses', 'scientific',
'methods', ',', 'processes', ',', 'algorithms', 'and', 'systems', 'to', 'extract', 'knowledge',
'and', 'insights', 'from', 'many', 'structural', 'and', 'unstructured', 'data', '.', 'Data',
'science', 'is', 'related', 'to', 'data', 'mining', ',', 'machine', 'learning', 'and', 'big',
'data', '.', 'Data', 'science', 'is', 'a', 'concept', 'to', 'unify', 'statistics', ',', 'data',
'analysis', ',', 'machine', 'learning', ',', 'domain', 'knowledge', 'and', 'their', 'related',
'methods', 'in', 'order', 'to', 'understand', 'and', 'analyze', 'actual', 'phenomena', 'with', 'data',
'.', 'It', 'uses', 'techniques', 'and', 'theories', 'drawn', 'from', 'many', 'fields', 'within', 'the',
'context', 'of', 'mathematics', ',', 'statistics', ',', 'computer', 'science', ',', 'domain', 'knowledge',
'and', 'information', 'science', '.', 'Turing', 'award', 'winner', 'Jim', 'Gray', 'imagined', 'data',
'science', 'as', 'a', 'fourth', 'paradigm', 'of', 'science',
'(', 'empirical', ',', 'theoretical', ',', 'computational', 'and', 'now', 'data-driven', ')',
'and', 'asserted', 'that', 'everything', 'about', 'science', 'is', 'changing', 'because', 'of',
'the', 'impact', 'of', 'information', 'technology', 'and', 'the', 'data', 'deluge']

I hope this example clears up the concept of tokenization. We will see why it is done when we dive into text analysis.

How to implement it in Python

It's pretty simple: you only need to import the function and apply it. Let's see how.

# Tokenizing using NLTK
import nltk

# One-time download of the tokenizer models
# (newer NLTK releases may ask for 'punkt_tab' instead of 'punkt')
nltk.download('punkt')

# Adjacent string literals inside parentheses are joined into one string
data = (
    "Data science is an inter-disciplinary field that uses scientific methods, processes, "
    "algorithms and systems to extract knowledge and insights from many structural "
    "and unstructured data. Data science is related to data mining, machine learning "
    "and big data. Data science is a concept to unify statistics, data analysis, "
    "machine learning, domain knowledge and their related methods in order to "
    "understand and analyze actual phenomena with data. It uses techniques and "
    "theories drawn from many fields within the context of mathematics, statistics, "
    "computer science, domain knowledge and information science. Turing award winner "
    "Jim Gray imagined data science as a fourth paradigm of science (empirical, "
    "theoretical, computational and now data-driven) and asserted that everything "
    "about science is changing because of the impact of information technology and "
    "the data deluge"
)

# sentence tokenization
nltk.sent_tokenize(data)

The result is shown below:

['Data science is an inter-disciplinary field that uses scientific methods, processes, algorithms and systems to extract knowledge and insights from many structural and unstructured data.',
'Data science is related to data mining, machine learning and big data.',
'Data science is a concept to unify statistics, data analysis, machine learning, domain knowledge and their related methods in order to understand and analyze actual phenomena with data.',
'It uses techniques and theories drawn from many fields within the context of mathematics, statistics, computer science, domain knowledge and information science.',
'Turing award winner Jim Gray imagined data science as a fourth paradigm of science (empirical, theoretical, computational and now data-driven) and asserted that everything about science is changing because of the impact of information technology and the data deluge']

# word tokenization
nltk.word_tokenize(data)

The result is shown below:

['Data', 'science', 'is', 'an', 'inter-disciplinary', 'field', 'that', 'uses',
'scientific', 'methods', ',', 'processes', ',', 'algorithms', 'and', 'systems',
'to', 'extract', 'knowledge', 'and', 'insights', 'from', 'many', 'structural',
'and', 'unstructured', 'data', '.', 'Data', 'science', 'is', 'related', 'to', 'data',
'mining', ',', 'machine', 'learning', 'and', 'big', 'data', '.', 'Data', 'science',
'is', 'a', 'concept', 'to', 'unify', 'statistics', ',', 'data', 'analysis', ',',
'machine', 'learning', ',', 'domain', 'knowledge', 'and', 'their', 'related',
'methods', 'in', 'order', 'to', 'understand', 'and', 'analyze', 'actual', 'phenomena', 'with', 'data',
'.', 'It', 'uses', 'techniques', 'and', 'theories', 'drawn', 'from', 'many',
'fields', 'within', 'the', 'context', 'of', 'mathematics', ',', 'statistics', ',',
'computer', 'science', ',', 'domain', 'knowledge', 'and', 'information', 'science', '.',
'Turing', 'award', 'winner', 'Jim', 'Gray', 'imagined', 'data', 'science', 'as', 'a',
'fourth', 'paradigm', 'of', 'science',
'(', 'empirical', ',', 'theoretical', ',', 'computational', 'and', 'now', 'data-driven', ')',
'and', 'asserted', 'that', 'everything', 'about', 'science', 'is', 'changing',
'because', 'of', 'the', 'impact', 'of', 'information', 'technology', 'and', 'the', 'data', 'deluge']
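To get a feel for what a tokenizer does under the hood, here is a minimal sketch that uses only Python's built-in `re` module. The function names `naive_sent_tokenize` and `naive_word_tokenize` are made up for this illustration, and the rules are far cruder than NLTK's: an abbreviation such as "Dr." followed by a name would be split incorrectly, for example.

```python
import re

def naive_sent_tokenize(text):
    # Split on whitespace that follows ., ! or ? and precedes a capital letter.
    parts = re.split(r'(?<=[.!?])\s+(?=[A-Z])', text)
    return [p.strip() for p in parts if p.strip()]

def naive_word_tokenize(sentence):
    # A token is either a word (hyphenated words stay together)
    # or a single punctuation character.
    return re.findall(r"\w+(?:-\w+)*|[^\w\s]", sentence)

sample = "Data science is an inter-disciplinary field. It uses data-driven methods!"
sentences = naive_sent_tokenize(sample)
words = naive_word_tokenize(sentences[0])

print(sentences)
print(words)
```

NLTK's own tokenizers handle many more cases, so this sketch is only meant to show the idea, not to replace them.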

Now, let's get our hands dirty with NLTK. In this section, we will learn to perform various NLP tasks using NLTK, starting with part-of-speech tagging and chunking and then moving on to stop words, stemming and lemmatization, named entity recognition, and parsing.

POS Tags and Chunking

Parts of Speech Tagging (POS tags)

As the name suggests, POS tagging labels each word with its part of speech.

In English grammar, a word's part of speech tells us what function the word has and how it is used in a sentence. Some of the common parts of speech in English are noun, pronoun, adjective, verb, and adverb.

Traditional grammar lists about nine parts of speech, but the tag sets used in NLP are finer-grained and contain many more tags. For example, nouns alone are split into:

- NN noun, singular 'table'

- NNS noun plural 'tables'

- NNP proper noun, singular

- NNPS proper noun, plural

So there are four separate tags for nouns alone. Similarly, other parts of speech are divided into multiple tags.

Let's take a small example to understand this.

# Requires the tagger model: nltk.download('averaged_perceptron_tagger')
text = 'He wants to play football'
pos = nltk.pos_tag(nltk.word_tokenize(text))
pos

Output:

[('He', 'PRP'),
('wants', 'VBZ'),
('to', 'TO'),
('play', 'VB'),
('football', 'NN')]

Notice how simple it is to get the parts of speech of our text using NLTK. We can also see that NLTK correctly recognizes the pronoun, verbs, and noun in the example.
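Because the tagger returns plain `(word, tag)` tuples, the result is easy to work with in ordinary Python. As a small sketch, the tagged output shown above is hard-coded here (so no NLTK models are needed to run it), and the words are grouped by the first two letters of their tag. The variable names are chosen only for this illustration.

```python
# Tagged output copied from the example above
tagged = [('He', 'PRP'), ('wants', 'VBZ'), ('to', 'TO'),
          ('play', 'VB'), ('football', 'NN')]

# All noun tags start with 'NN' and all verb tags start with 'VB'
nouns = [word for word, tag in tagged if tag.startswith('NN')]
verbs = [word for word, tag in tagged if tag.startswith('VB')]

print(nouns)
print(verbs)
```

Filtering on the tag prefix is a common shortcut, since it catches every variant of a noun (NN, NNS, NNP, NNPS) or verb (VB, VBD, VBG, and so on) in one test.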

Below is a list of the POS tags used by NLTK, which will come in handy.

POS tag list

- CC coordinating conjunction

- CD cardinal number

- DT determiner

- EX existential there (like: "there is" ... think of it like "there exists")

- FW foreign word

- IN preposition/subordinating conjunction

- JJ adjective 'big'

- JJR adjective, comparative 'bigger'

- JJS adjective, superlative 'biggest'

- LS list marker 1)

- MD modal could, will

- NN noun, singular 'desk'

- NNS noun plural 'desks'

- NNP proper noun, singular 'Harrison'

- NNPS proper noun, plural 'Americans'

- PDT predeterminer 'all the kids'

- POS possessive ending parent's

- PRP personal pronoun I, he, she

- PRP$ possessive pronoun my, his, hers

- RB adverb very, silently

- RBR adverb, comparative better

- RBS adverb, superlative best

- RP particle give up

- TO to go 'to' the store.

- UH interjection errrrrrrrm

- VB verb, base form take

- VBD verb, past tense took

- VBG verb, gerund/present participle taking

- VBN verb, past participle taken

- VBP verb, non-3rd person singular present take

- VBZ verb, 3rd person sing. present takes

- WDT wh-determiner which

- WP wh-pronoun who, what

- WP$ possessive wh-pronoun whose

- WRB wh-adverb where, when

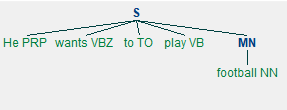

Chunking

After POS tagging, chunking can be used to give the data more structure by grouping tagged words according to a set of rules that we define. Chunking is also known as shallow parsing. Let's understand chunking through the following example:

text = 'We will see an example of POS tagging.'
pos = nltk.pos_tag(nltk.word_tokenize(text))

# Once POS tagging is done, say we want to structure the data further so that
# nouns are grouped under one specific node defined by us:
my_node = "MN: {<NNP>*<NN>}"
chunk = nltk.RegexpParser(my_node)
result = chunk.parse(pos)
print(result)

# draw() shows the same chunk structure graphically (it opens a window)
result.draw()

Output:

(S
  We/PRP
  will/MD
  see/VB
  an/DT
  (MN example/NN)
  of/IN
  (MN POS/NNP tagging/NN)
  ./.)

Graphical representation:

We can see that each noun, together with any proper nouns directly in front of it, is now grouped under "MN" (the node name we defined).

So, whenever we need to group different tags under one tag, we can use chunking.
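Once the text is chunked, we usually want the chunks back as plain strings. The sketch below feeds `nltk.RegexpParser` the tagged tokens from the output above (hard-coded, so no tagger model is required) and collects every subtree labelled "MN". The variable names are chosen for this illustration only.

```python
import nltk

# Tagged tokens copied from the chunking output above
tagged = [('We', 'PRP'), ('will', 'MD'), ('see', 'VB'), ('an', 'DT'),
          ('example', 'NN'), ('of', 'IN'), ('POS', 'NNP'),
          ('tagging', 'NN'), ('.', '.')]

chunker = nltk.RegexpParser("MN: {<NNP>*<NN>}")
tree = chunker.parse(tagged)

# Walk the tree and join the words of every MN chunk
chunks = [
    " ".join(word for word, tag in subtree.leaves())
    for subtree in tree.subtrees(filter=lambda t: t.label() == 'MN')
]
print(chunks)
```

The rule `<NNP>*<NN>` reads as "zero or more proper nouns followed by one singular noun", which is why "POS tagging" comes out as a single chunk.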

Stop Words

Stop words are words that occur very frequently, such as ‘a’, ‘an’, ‘the’, ‘is’, and ‘at’. They mostly serve to connect other words. We usually drop them during preprocessing, since they carry little information and only take up additional space. We can also build our own custom list of stop words if we want. Different libraries ship different stop word lists.

Let's look at some of the stop words in NLTK's list:

from nltk.corpus import stopwords

# Requires the stop word corpus: nltk.download('stopwords')
stop_words = stopwords.words('english')

# print first 10 stop words
stop_words[:10]

Output:

['i', 'me', 'my', 'myself', 'we', 'our', 'ours', 'ourselves', 'you', "you're"]

If you want to see the whole stop word list, change the slice in the last line.

Let's look at punctuation

We can also drop punctuation marks from our set of words, just like stop words. NLTK does not need its own list for this, because Python's built-in string module provides one.

import string
punct = string.punctuation
punct

Output:

'!"#$%&\'()*+,-./:;<=>?@[\\]^_`{|}~'

These are the punctuation characters that we will filter out alongside the stop words.

Let's word tokenize our example after removing the stop words and punctuation.

import nltk
import string
from nltk.corpus import stopwords

stop_words = stopwords.words('english')
punct = string.punctuation

data1 = data
clean_data = []
for word in nltk.word_tokenize(data1):
    # keep a token only if it is neither punctuation nor a stop word
    if word not in punct:
        if word not in stop_words:
            clean_data.append(word)

clean_data

Output:

['Data', 'science', 'inter-disciplinary', 'field', 'uses', 'scientific',
'methods', 'processes', 'algorithms', 'systems', 'extract', 'knowledge',
'insights', 'many', 'structural', 'unstructured', 'data', 'Data', 'science',
'related', 'data', 'mining', 'machine', 'learning', 'big', 'data', 'Data',
'science', 'concept', 'unify', 'statistics', 'data', 'analysis', 'machine',
'learning', 'domain', 'knowledge', 'related', 'methods', 'order', 'understand',
'analyze', 'actual', 'phenomena', 'data', 'It', 'uses', 'techniques', 'theories',
'drawn', 'many', 'fields', 'within', 'context', 'mathematics', 'statistics', 'computer',
'science', 'domain', 'knowledge', 'information', 'science', 'Turing', 'award', 'winner',
'Jim', 'Gray', 'imagined', 'data', 'science', 'fourth', 'paradigm', 'science', 'empirical',
'theoretical', 'computational', 'data-driven', 'asserted', 'everything', 'science',
'changing', 'impact', 'information', 'technology', 'data', 'deluge']

That's great! Our data looks much cleaner now that the stop words and punctuation are gone.

I hope this makes clear why we remove stop words and punctuation before processing our data.
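Note that 'It' survived the cleaning above: NLTK's stop word list is lowercase, and the comparison is case-sensitive. A minimal sketch of a case-insensitive filter is shown below. The helper name `clean_tokens` and the tiny `STOP_WORDS` set are assumptions made for this example; in practice you would pass in `stopwords.words('english')`.

```python
import string

# A tiny hand-picked stop word set, for illustration only
STOP_WORDS = {"it", "is", "an", "and", "to", "the", "of", "that", "from"}

def clean_tokens(tokens, stop_words=STOP_WORDS):
    punct = set(string.punctuation)
    # Lowercase each token before the stop word test so 'It' matches 'it'
    return [t for t in tokens
            if t not in punct and t.lower() not in stop_words]

tokens = ['It', 'uses', 'techniques', 'and', 'theories',
          'drawn', 'from', 'many', 'fields', '.']
cleaned = clean_tokens(tokens)
print(cleaned)
```

Only the comparison is lowercased; the tokens themselves keep their original case, which matters later for tasks such as named entity recognition.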

Let's see POS tagging for our cleaned data:

nltk.pos_tag(clean_data)

Output:

[('Data', 'NNP'),

('science', 'NN'),

('inter-disciplinary', 'JJ'),

('field', 'NN'),

('uses', 'VBZ'),

('scientific', 'JJ'),

('methods', 'NNS'),

('processes', 'VBZ'),

('algorithms', 'JJ'),

('systems', 'NNS'),

('extract', 'JJ'),

('knowledge', 'NNP'),

('insights', 'NNS'),

('many', 'JJ'),

('structural', 'JJ'),

('unstructured', 'JJ'),

('data', 'NNS'),

('Data', 'NNS'),

('science', 'NN'),

('related', 'VBN'),

('data', 'NNS'),

('mining', 'NN'),

('machine', 'NN'),

('learning', 'VBG'),

('big', 'JJ'),

('data', 'NNS'),

('Data', 'NNP'),

('science', 'NN'),

('concept', 'NN'),

('unify', 'JJ'),

('statistics', 'NNS'),

('data', 'NNS'),

('analysis', 'NN'),

('machine', 'NN'),

('learning', 'VBG'),

('domain', 'NN'),

('knowledge', 'NN'),

('related', 'VBN'),

('methods', 'NNS'),

('order', 'NN'),

('understand', 'VBP'),

('analyze', 'NN'),

('actual', 'JJ'),

('phenomena', 'NN'),

('data', 'NNS'),

('It', 'PRP'),

('uses', 'VBZ'),

('techniques', 'NNS'),

('theories', 'NNS'),

('drawn', 'VBP'),

('many', 'JJ'),

('fields', 'NNS'),

('within', 'IN'),

('context', 'NN'),

('mathematics', 'NNS'),

('statistics', 'NNS'),

('computer', 'NN'),

('science', 'NN'),

('domain', 'NN'),

('knowledge', 'NN'),

('information', 'NN'),

('science', 'NN'),

('Turing', 'NNP'),

('award', 'NN'),

('winner', 'NN'),

('Jim', 'NNP'),

('Gray', 'NNP'),

('imagined', 'VBD'),

('data', 'NNS'),

('science', 'NN'),

('fourth', 'JJ'),

('paradigm', 'NN'),

('science', 'NN'),

('empirical', 'JJ'),

('theoretical', 'JJ'),

('computational', 'JJ'),

('data-driven', 'JJ'),

('asserted', 'VBD'),

('everything', 'NN'),

('science', 'NN'),

('changing', 'VBG'),

('impact', 'JJ'),

('information', 'NN'),

('technology', 'NN'),

('data', 'NNS'),

('deluge', 'NN')]

Above, we used the NLTK package to apply POS tagging to our text data after cleaning it.

Next, let's see what stemming and lemmatization are and how we can apply them.

Stemming and Lemmatization

Words in a sentence are not always used in their base form; they are inflected according to the rules of grammar, e.g.

playing ---> play (base word)

plays ---> play (base word)

play ---> play (base word)

Although the underlying meaning is the same, the form of the base word changes to keep the sentence grammatically correct.

Stemming and lemmatization are both used to bring such words back to their base form, so that words with the same base are treated as the same word rather than as different words.

The difference between stemming and lemmatization lies in how they reduce a word to its base form.

Stemming

Stemming means mapping a group of words to the same stem by stripping affixes (mostly suffixes), without caring whether the resulting stem is itself a meaningful word.

e.g.

computation --> comput

computer --> comput

hobbies --> hobbi

We can see that stemming tries to reduce each word to its base, but the resulting stem may or may not be a valid word.

Three stemmers from the NLTK package are commonly used:

1) Porter Stemmer

2) Lancaster Stemmer

3) Snowball Stemmer

Let's see how to use each of them:

from nltk.stem import PorterStemmer
from nltk.stem import LancasterStemmer, SnowballStemmer

lancaster = LancasterStemmer()
porter = PorterStemmer()
snowball = SnowballStemmer("english")

print('Porter stemmer')
print(porter.stem("hobby"))
print(porter.stem("hobbies"))
print(porter.stem("computer"))
print(porter.stem("computation"))
print("------------------------------------")

print('lancaster stemmer')
print(lancaster.stem("hobby"))
print(lancaster.stem("hobbies"))
print(lancaster.stem("computer"))
print(lancaster.stem("computation"))
print("------------------------------------")

print('Snowball stemmer')
print(snowball.stem("hobby"))
print(snowball.stem("hobbies"))
print(snowball.stem("computer"))
print(snowball.stem("computation"))

Output:

Porter stemmer

hobbi

hobbi

comput

comput

------------------------------------

lancaster stemmer

hobby

hobby

comput

comput

------------------------------------

Snowball stemmer

hobbi

hobbi

comput

comput

The Lancaster stemmer is the most aggressive of the three, so it sometimes shortens words more than we would like. The Porter stemmer is the oldest of these algorithms and was long the most popular.

The Snowball stemmer, also known as Porter2, is an updated version of the Porter stemmer and is generally preferred over the original.

The Snowball stemmer is available for multiple languages as well.
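As a quick sketch of the multi-language support, the supported language names can be read from the `languages` attribute of `SnowballStemmer`, and a stemmer for another language is created by passing its name. The German word used below is just an arbitrary example, and its stem is printed rather than assumed.

```python
from nltk.stem import SnowballStemmer

# Tuple of language names that SnowballStemmer accepts
supported = SnowballStemmer.languages
print(supported)

# Build a stemmer for a language other than English
german = SnowballStemmer("german")
german_stem = german.stem("laufen")
print(german_stem)
```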

print(porter.stem("playing"))
print(porter.stem("plays"))
print(porter.stem("play"))

Output:

play

play

play
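The practical benefit of stemming shows up when we count words: different forms of the same word collapse into a single entry. The following sketch uses the Porter stemmer with Python's `collections.Counter`; the token list is invented for this illustration.

```python
from collections import Counter
from nltk.stem import PorterStemmer

porter = PorterStemmer()

# A made-up token list containing several forms of the same words
tokens = ['play', 'plays', 'playing', 'played', 'computer', 'computation']

# Count stems instead of raw tokens
stem_counts = Counter(porter.stem(token) for token in tokens)
print(stem_counts)
```

Six distinct tokens are reduced to two stems, which is exactly the "treat words with the same base as the same word" behavior described above.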

Lemmatization

Lemmatization does the same job as stemming, in that it tries to bring a word to its base form, but unlike stemming it takes the meaning of the word into account: the base form it returns is a real word of the language. This base word is known as the ‘lemma’.

We use the WordNet lemmatizer for lemmatization in NLTK.

from nltk.stem import WordNetLemmatizer

# Requires the WordNet corpus: nltk.download('wordnet')
lemma = WordNetLemmatizer()
print(lemma.lemmatize('playing'))
print(lemma.lemmatize('plays'))
print(lemma.lemmatize('play'))

Output:

playing

play

play

Here we can see that the lemmas differ even though the words share the same base: ‘playing’ was left unchanged.

This is because we haven't given the lemmatizer any context, so it treats every word as a noun by default.

Context is generally supplied by passing the POS tag of each word, e.g.

print(lemma.lemmatize('playing', pos='v'))
print(lemma.lemmatize('plays', pos='v'))
print(lemma.lemmatize('play', pos='v'))

Output:

play

play

play

Lemmatization is more complex than stemming and takes more time to compute. So it should be used only when the real meaning of words or their context is necessary for processing; otherwise stemming should be preferred. The choice depends entirely on the type of problem you are trying to solve.
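The tags returned by `nltk.pos_tag` (such as 'VBZ') are not the same as the one-letter codes that the lemmatizer's `pos` argument expects ('n', 'v', 'a', 'r'), so a small conversion step is needed between the two. The helper below, `penn_to_wordnet`, is a hypothetical name for a simplified mapping, and the tagged sentence is hard-coded from the earlier POS example so the sketch runs without any NLTK data.

```python
def penn_to_wordnet(tag):
    """Map a Penn Treebank tag to a WordNet POS letter (simplified)."""
    if tag.startswith('J'):
        return 'a'   # adjective
    if tag.startswith('V'):
        return 'v'   # verb
    if tag.startswith('R'):
        return 'r'   # adverb
    return 'n'       # noun, the lemmatizer's default

# Tagged output from the POS tagging example earlier in the article
tagged = [('He', 'PRP'), ('wants', 'VBZ'), ('to', 'TO'),
          ('play', 'VB'), ('football', 'NN')]

wordnet_pos = [(word, penn_to_wordnet(tag)) for word, tag in tagged]
print(wordnet_pos)
```

Each pair can then be passed on as `lemma.lemmatize(word, pos=letter)`, so that verbs such as 'wants' are lemmatized as verbs rather than as nouns.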

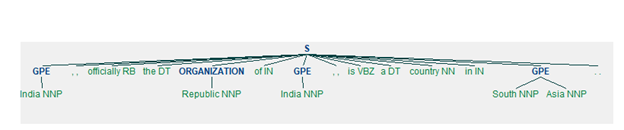

Named Entity Recognition (NER)

In the chunking section, we saw that we can set rules to group different POS tags under a single user-defined tag. One such form of chunking in NLP is known as Named Entity Recognition.

In NER, we try to identify and group named entities such as people, places, countries, and organizations.

# Requires the NE chunker data: nltk.download('maxent_ne_chunker') and nltk.download('words')
text_data = "India, officially the Republic of India, is a country in South Asia."
words = nltk.word_tokenize(text_data)
pos_tag = nltk.pos_tag(words)
namedEntity = nltk.ne_chunk(pos_tag)
print(namedEntity)
namedEntity.draw()

Output:

(S
  (GPE India/NNP)
  ,/,
  officially/RB
  the/DT
  (ORGANIZATION Republic/NNP)
  of/IN
  (GPE India/NNP)
  ,/,
  is/VBZ
  a/DT
  country/NN
  in/IN
  (GPE South/NNP Asia/NNP)
  ./.)

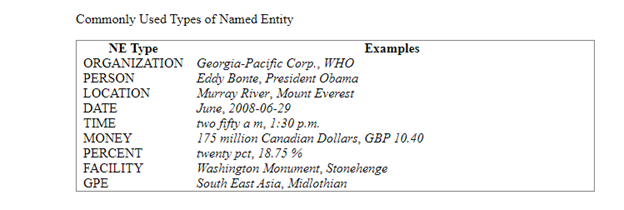

Graph

List of commonly used named entity types

With the help of NER, we can pick out any particular category of entity from a given document or sentence. For example, if we need all the names of people mentioned in a document, we can run NER and select the words tagged "PERSON".

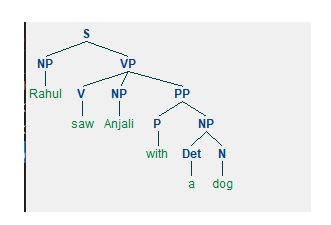
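Selecting one category from the NER result comes down to walking the tree and keeping the subtrees with the label we want. In the sketch below, the tree from the output above is rebuilt by hand with `nltk.tree.Tree` so that it runs without the NER models; with real data you would pass the result of `nltk.ne_chunk` instead. The helper name `entities_with_label` is an assumption made for this example.

```python
from nltk.tree import Tree

# Hand-built copy of the ne_chunk output shown above
ne_tree = Tree('S', [
    Tree('GPE', [('India', 'NNP')]),
    (',', ','), ('officially', 'RB'), ('the', 'DT'),
    Tree('ORGANIZATION', [('Republic', 'NNP')]),
    ('of', 'IN'),
    Tree('GPE', [('India', 'NNP')]),
    (',', ','), ('is', 'VBZ'), ('a', 'DT'), ('country', 'NN'), ('in', 'IN'),
    Tree('GPE', [('South', 'NNP'), ('Asia', 'NNP')]),
    ('.', '.'),
])

def entities_with_label(tree, label):
    # Join the words of every subtree that carries the requested label
    return [" ".join(word for word, tag in subtree.leaves())
            for subtree in tree.subtrees()
            if subtree.label() == label]

places = entities_with_label(ne_tree, 'GPE')
print(places)
```

Swapping 'GPE' for 'PERSON' or 'ORGANIZATION' selects those categories in the same way.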

Parsing

Parsing is the process of determining the structure of a given text on the basis of a given set of grammatical rules.

e.g.

- Divide a sentence into a Noun_phrase and a Verb_phrase.

- Break a Noun_phrase further into a proper noun, or a determiner followed by a noun.

- Break a Verb_phrase into a verb followed by a Noun_phrase.

These are the grammatical rules we will use to parse a given text.

text = "Ram ate a mango."

- Proper_noun = Ram

- Noun = mango

- determiner = a

- verb = ate

Once you pass the above set of grammatical rules to a parser, it will break the given text down accordingly and produce output like this:

Noun_Phrase(Proper_Noun(Ram)), Verb_Phrase(verb(ate), noun_phrase(determiner(a), noun(mango)))

A parser that works this way, expanding the rules from the top down, is called a recursive descent parser.
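The rules above can be written down as a small context-free grammar and handed to NLTK's `RecursiveDescentParser`. This is a minimal sketch: the non-terminal names (`PropN`, `Det`, and so on) are abbreviations chosen for this example, and the final full stop is left out of the sentence to keep the grammar short.

```python
import nltk

# The informal rules above, expressed as a context-free grammar
grammar = nltk.CFG.fromstring("""
S -> NP VP
NP -> PropN | Det N
VP -> V NP
PropN -> "Ram"
Det -> "a"
N -> "mango"
V -> "ate"
""")

parser = nltk.RecursiveDescentParser(grammar)
tokens = "Ram ate a mango".split()
trees = list(parser.parse(tokens))

for tree in trees:
    print(tree)
```

The printed tree has the same nesting as the hand-written output above: a noun phrase containing the proper noun, followed by a verb phrase that contains the verb and a second noun phrase.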

There are many different types of parsers available in the NLTK library. Each one has a different use case and can be chosen according to the requirement.

Let's print the list of NLTK parsers:

dir(nltk.parse)

Output

['BllipParser',

'BottomUpChartParser',

'BottomUpLeftCornerChartParser',

'BottomUpProbabilisticChartParser',

'ChartParser',

'CoreNLPDependencyParser',

'CoreNLPParser',

'DependencyEvaluator',

'DependencyGraph',

'EarleyChartParser',

'FeatureBottomUpChartParser',

'FeatureBottomUpLeftCornerChartParser',

'FeatureChartParser',

'FeatureEarleyChartParser',

'FeatureIncrementalBottomUpChartParser',

'FeatureIncrementalBottomUpLeftCornerChartParser',

'FeatureIncrementalChartParser',

'FeatureIncrementalTopDownChartParser',

'FeatureTopDownChartParser',

'IncrementalBottomUpChartParser',

'IncrementalBottomUpLeftCornerChartParser',

'IncrementalChartParser',

'IncrementalLeftCornerChartParser',

'IncrementalTopDownChartParser',

'InsideChartParser',

'LeftCornerChartParser',

'LongestChartParser',

'MaltParser',

'NaiveBayesDependencyScorer',

'NonprojectiveDependencyParser',

'ParserI',

'ProbabilisticNonprojectiveParser',

'ProbabilisticProjectiveDependencyParser',

'ProjectiveDependencyParser',

'RandomChartParser',

'RecursiveDescentParser',

'ShiftReduceParser',

'SteppingChartParser',

'SteppingRecursiveDescentParser',

'SteppingShiftReduceParser',

'TestGrammar',

'TopDownChartParser',

'TransitionParser',

'UnsortedChartParser',

'ViterbiParser',

'__builtins__',

'__cached__',

'__doc__',

'__file__',

'__loader__',

'__name__',

'__package__',

'__path__',

'__spec__',

'api',

'bllip',

'chart',

'corenlp',

'dependencygraph',

'earleychart',

'evaluate',

'extract_test_sentences',

'featurechart',

'load_parser',

'malt',

'nonprojectivedependencyparser',

'pchart',

'projectivedependencyparser',

'recursivedescent',

'shiftreduce',

'transitionparser',

'util',

'viterbi']

# Grammar rules

grammar = nltk.CFG.fromstring("""

S -> NP VP

VP -> V NP | V NP PP

PP -> P NP

V -> "saw" | "slept" | "walked"

NP -> "Rahul" | "Anjali" | Det N | Det N PP

Det -> "a" | "an" | "the" | "my"

N -> "man" | "dog" | "cat" | "telescope" | "park"

P -> "in" | "on" | "by" | "with"

""")

Let's parse a sentence with this grammar and print the resulting tree:

sent = "Rahul saw Anjali with a dog".split()
parser = nltk.RecursiveDescentParser(grammar)
for tree in parser.parse(sent):
    print(tree)
    tree.draw()

Output:

(S
  (NP Rahul)
  (VP (V saw) (NP Anjali) (PP (P with) (NP (Det a) (N dog)))))

Let's see the graph of the grammar
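A grammar can allow more than one structure for the same sentence. As a sketch, the same grammar is used below with `nltk.ChartParser` on a different sentence, "Rahul saw the man with a telescope" (a sentence picked for this example). Here the phrase "with a telescope" can attach either to the verb or to "the man", so the parser returns two trees, while the article's sentence has only one.

```python
import nltk

grammar = nltk.CFG.fromstring("""
S -> NP VP
VP -> V NP | V NP PP
PP -> P NP
V -> "saw" | "slept" | "walked"
NP -> "Rahul" | "Anjali" | Det N | Det N PP
Det -> "a" | "an" | "the" | "my"
N -> "man" | "dog" | "cat" | "telescope" | "park"
P -> "in" | "on" | "by" | "with"
""")

parser = nltk.ChartParser(grammar)

# Ambiguous: who has the telescope?
ambiguous = "Rahul saw the man with a telescope".split()
ambiguous_trees = list(parser.parse(ambiguous))

# The sentence from the article has a single reading under this grammar
single = "Rahul saw Anjali with a dog".split()
single_trees = list(parser.parse(single))

print(len(ambiguous_trees), len(single_trees))
for tree in ambiguous_trees:
    print(tree)
```

Which reading is the right one cannot be decided by the grammar alone, which is one reason NLTK also ships probabilistic parsers.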

I hope that after reading this article you have a clear idea of what NLP and the NLTK package are, and of the main terms used in NLP.

In upcoming articles, I will give a detailed explanation of some other NLP packages such as spaCy and Gensim. For more blogs and courses on data science, machine learning, artificial intelligence, and new technologies, do visit us at InsideAIML.

Thanks for reading…